OK, time to make some more progress on connecting ground-based measurements with the analytic results simulated by solaR. I’ve been stymied a bit by the last stages of solaR, which translate the top-of-atmosphere calculation of Bo0 into best-case and realistic solar production. There are really 3 steps in between:

- From Bo0, plus geometric, temperature and calibration measurements, to earth-surface values of G0 (GHI, global horizontal irradiance) and D0 (DHI, diffuse horizontal irradiance)

- From those to Geffective and Deffective, based on the tilt and orientation of my fixed solar install

- And from those irradiances to actual solar production.
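To make the middle step concrete, here is a minimal sketch of moving from horizontal irradiance to the plane of a fixed, tilted array. It uses the textbook isotropic-sky transposition, which is an assumption on my part and not necessarily what solaR does internally. It also stops at plane-of-array irradiance and leaves out the dirt and incidence-angle losses that an "effective" irradiance would include. The function name, parameter names and the default albedo of 0.2 are all hypothetical.

```python
import math

def tilted_irradiance_isotropic(ghi, dhi, zenith_deg, sun_azimuth_deg,
                                tilt_deg, panel_azimuth_deg, albedo=0.2):
    """Global irradiance on a tilted plane using the isotropic-sky model.

    ghi, dhi: global and diffuse horizontal irradiance (same units in and out).
    Azimuths only need to share a convention, since only their difference is used.
    """
    zen = math.radians(zenith_deg)
    tilt = math.radians(tilt_deg)
    azimuth_diff = math.radians(sun_azimuth_deg - panel_azimuth_deg)

    cos_zen = math.cos(zen)
    if cos_zen <= 0.0:
        return 0.0  # sun at or below the horizon

    # Cosine of the angle between the sun and the panel normal,
    # clipped at zero when the sun is behind the panel.
    cos_incidence = (cos_zen * math.cos(tilt)
                     + math.sin(zen) * math.sin(tilt) * math.cos(azimuth_diff))
    cos_incidence = max(cos_incidence, 0.0)

    beam_horizontal = max(ghi - dhi, 0.0)
    # Note: this ratio grows large very close to the horizon; real tools cap it.
    beam_tilted = beam_horizontal * cos_incidence / cos_zen
    diffuse_tilted = dhi * (1.0 + math.cos(tilt)) / 2.0     # sky seen by the panel
    reflected = albedo * ghi * (1.0 - math.cos(tilt)) / 2.0  # ground seen by the panel
    return beam_tilted + diffuse_tilted + reflected
```

A quick sanity check on the sketch: with a tilt of 0 the panel is horizontal, so the result should collapse back to GHI.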

I really just want to get to Geffective and chart it against my solar production. But right now, it’s not clear those steps work the way I’m trying to use them in the package.

In the meantime, I dug up some local half-hourly solar measurements for my area, courtesy of the NREL NSRDB resource. It’s a 2013 through 2015 record that I can compare my data against, and also use to calibrate and even feed solaR, if I can get it working. But for now I’m going to use it to make sense of my solar data.

The first thing I attempted to do is chart measured GHI (G0) for this time period against my kWh production during the same period.

Wow! That looks familiar. Another “wing” pattern. It looks very close to my chart of Bo0 (calculated using solar geometry) vs. kWh, though a little more diffuse, since it contains only 50K points instead of the 225K points in the original (the measured data covers only half the period at half the sample frequency, so about 1/4 as many points). If I compare against the Clearsky GHI, it looks even closer to the theoretical Bo0 chart, because NREL’s Clearsky calculation removes the effects of clouds.

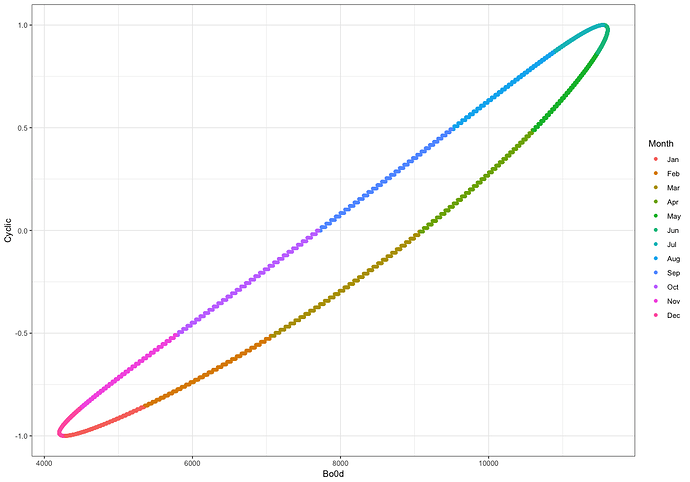

The same pattern exactly, including the “shadow wing” (of course), just compressed in the x dimension. Just for fun, let’s compare the Bo0 (theoretical calculated value) against the Clearsky GHI (a compensated ground measurement).

Very close to a linear relationship, with a little bit of a loop/hysteresis. I still suspect that the loop may come from some timebase offset between the two measurements, even though they are charted against the same measurement times. Just estimating, it looks like Clearsky GHI equals 1000 when the top-of-atmosphere calculation gives 1250, so there’s about a 20% energy loss from the top to the bottom of the atmosphere without clouds at my latitude.
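Here is a small sketch of both points in that paragraph: the roughly 20% loss estimate, and how a timebase offset alone could open up a loop. It is a toy model, not my data. The day is an idealized half sine from 06:00 to 18:00, the 1250 and 1000 peaks are the eyeballed values from the chart, and all function names are hypothetical.

```python
import math

def clear_sky_loss(ground, top_of_atmosphere):
    """Fraction of top-of-atmosphere irradiance that does not reach the ground."""
    return 1.0 - ground / top_of_atmosphere

def day_shape(hour):
    """Idealized clear-day profile: half sine between 06:00 and 18:00, zero at night."""
    return max(0.0, math.sin(math.pi * (hour - 6.0) / 12.0))

def paired_series(offset_minutes, step_minutes=30):
    """(top_of_atmosphere, ground) pairs for one day, with the ground series
    lagging the top-of-atmosphere series by offset_minutes."""
    pairs = []
    for minute in range(0, 24 * 60, step_minutes):
        hour = minute / 60.0
        toa = 1250.0 * day_shape(hour)
        ground = 1000.0 * day_shape(hour - offset_minutes / 60.0)
        pairs.append((toa, ground))
    return pairs

def loop_gap(pairs):
    """Largest difference in the ground value between a morning sample and the
    afternoon sample mirrored around noon (both share the same top-of-atmosphere
    value). Zero means the scatter is a single line; anything else is a loop."""
    n = len(pairs)
    return max(abs(pairs[i][1] - pairs[n - i][1]) for i in range(1, n // 2))

print(clear_sky_loss(1000.0, 1250.0))    # loss fraction from the eyeballed peaks
print(loop_gap(paired_series(0)))        # no offset: morning and afternoon overlap
print(loop_gap(paired_series(15)))       # 15-minute offset: the two branches separate
```

In this toy model, even a 15-minute offset on half-hourly samples separates the morning and afternoon branches by a visible amount, so a small timestamp mismatch would be enough to produce a loop like the one in the chart.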

Two pieces of good news…

- Local ground data agrees with my solaR-based Bo0 calculations, so I should be able to use it to calibrate. I might still need to take a look at what creates the loop between Bo0 and Clearsky GHI.

- I’m encouraged to focus on the next two steps in the calculation process.

PS: The other thing that makes me want to look more closely at the loop between my calculated data (Bo0) and my ground-based observation data (Clearsky GHI) is that the Zenith angles coming from each source show the same loop behavior in the early morning and late evening.

ZenithN is ground-based, from the NREL data for latitude 37.65N, and ZenithS, produced by solaR, is for latitude 37.453N. That suggests that either my timebase is off, or the loop is caused by slight differences in longitude (BTW, longitude differences = time differences) and latitude between the measurement points. More, soon…
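A rough way to separate those two suspects is sketched below. It uses the standard relation cos(zenith) = sin(lat)·sin(dec) + cos(lat)·cos(dec)·cos(hour angle), plus the fact that the earth turns 15 degrees per hour, so 1 degree of longitude is 4 minutes of solar time. The function names, the 0.5 degree shift, the declination of 0 and the 75 degree hour angle are all made-up illustration values, not my actual site data.

```python
import math

def solar_zenith_deg(lat_deg, declination_deg, hour_angle_deg):
    """Solar zenith angle in degrees from latitude, declination and hour angle."""
    lat = math.radians(lat_deg)
    dec = math.radians(declination_deg)
    ha = math.radians(hour_angle_deg)
    cos_z = (math.sin(lat) * math.sin(dec)
             + math.cos(lat) * math.cos(dec) * math.cos(ha))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_z))))

def longitude_offset_minutes(delta_lon_deg):
    """Solar-time difference for a longitude difference: 4 minutes per degree."""
    return 4.0 * delta_lon_deg

def zenith_loop_gap_deg(lat_b_deg, hour_angle_shift_deg,
                        declination_deg=0.0, hour_angle_deg=75.0):
    """Site A sees the same zenith at -hour_angle (morning) and +hour_angle
    (evening). This returns how far apart site B's zenith is at those same two
    instants when its hour angle is shifted (by longitude or a clock offset).
    Zero means no loop in a ZenithA vs. ZenithB chart."""
    morning = solar_zenith_deg(lat_b_deg, declination_deg,
                               -hour_angle_deg + hour_angle_shift_deg)
    evening = solar_zenith_deg(lat_b_deg, declination_deg,
                               hour_angle_deg + hour_angle_shift_deg)
    return abs(morning - evening)

# Latitude difference only (37.65N vs. 37.453N, no shift): no morning/evening gap.
print(zenith_loop_gap_deg(37.453, 0.0))
# Hypothetical 0.5 degree shift (2 minutes of solar time): a gap appears.
print(longitude_offset_minutes(0.5), zenith_loop_gap_deg(37.453, 0.5))
```

In this idealized geometry, a latitude difference moves the morning and evening points by the same amount, so on its own it cannot open a loop. Only a shift in hour angle, from longitude or from the timebase, does. That points toward the time/longitude explanation, though it is a model result and not a check against the real data.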